Earlier this week I went to a talk by Professor David Nutt entitled ‘Time to Grow Up?’ where he spoke about the damage caused by the UK government’s approach towards drugs. David Nutt has become quite well known in the UK since the Home Secretary fired him in 2009 as chairman of the Advisory Council on the Misuse of Drugs for clashing with government policy. Most notably, he published an editorial in the Journal of Psychopharmacology in which he said that the dangers of ecstasy were comparable to those of horse-riding.

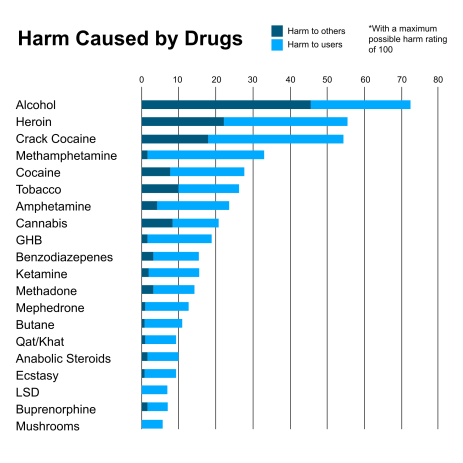

I was already well aware of his views on the relative risks of drugs (alcohol causes far more damage overall than anything else) and his views on the government’s approach to banning new drugs (such as mephedrone, which potentially saved more lives than it took). He laid out the evidence very clearly and presented it in a very engaging manner – I would recommend checking out his blog if you’re interested.

When adding together harm to self and harm to others, alcohol tops the list of most harmful drugs. Image credit: The Economist

However, one aspect really caught my attention – the study of illegal drugs as therapeutics or medicine. The case for cannabis is well-known: its potency as a pain reliever has led to it now being prescribed by doctors in some countries. But what about MDMA (ecstasy) used to treat post-traumatic stress disorder, or psilocybin (the hallucinogen found in magic mushrooms) used to treat depression or obsessive-compulsive disorder? These drugs have been suggested as potential treatments but there are so few studies conducted that we can’t be sure.

Science on drugs

Cannabis, MDMA and psilocybin are all considered to have no therapeutic value by the UK government and so are listed as Schedule 1 drugs. This means that not only are researchers required to get a licence from the Home Office, but they also have to find a supplier who holds the correct licence. These licences cost thousands of pounds, take about a year to be approved and then result in police inspections on a regular basis. On top of that, many research funding bodies are reluctant to sponsor studies on illegal drugs or refuse to fund them altogether.

It is easy to see why there hasn’t been much research done.

Nonetheless, David Nutt made a big splash two months ago when he televised a study on the neurological effects of MDMA. In Drugs Live: The Ecstasy Trial, 25 volunteers were studied by fMRI after taking either the drug or a placebo in a double-blind trial – making it the largest ever brain-imaging study of MDMA conducted. In time, the full results will be published in peer-reviewed journals and they are actually expecting to publish 5-6 papers from this single study.

This research was not just to look at the effects of taking an illegal drug – understanding the neurological mechanism of MDMA could potentially help millions. Back in 2010, a US study of patients with post-traumatic stress disorder showed that MDMA, combined with psychotherapy, cured 10 out of the 12 people given the drug in a randomised controlled trial. These were people who had not responded to government-approved drugs or psychotherapy alone, and two months after the study they were free of symptoms. MDMA appears to dampen negative emotions, which allows a patient to revisit their traumatic memories without the associated emotional pain. This can be the starting point for the patient to come to terms with their trauma and deal with it through therapy. These results, although from a very small sample size, are near miraculous and yet it took over ten years for the researchers to get approval.

The magic of mushrooms

David Nutt has also previously studied the effects of psilocybin on the brain and the results were surprising. Psilocybin is a hallucinogen, causing colours, sounds and memories to appear much more vivid than usual. With this kind of effect it was expected that psilocybin would activate certain areas of the brain, which would be seen as bright patches on an fMRI scan. However, they actually saw dark blue patches of decreased brain activity, but only in a specific area of the brain that acts as a central hub for connections.

Psilocybin is the hallucinogen in magic mushrooms. Like MDMA, it is a class A drug in the UK, but could it be used to treat depression?

Decreasing brain activity doesn’t sound like a good thing, but one of these connector hubs, known as the posterior cingulate cortex, is over-active in people with depression. This area has several roles but can cause anxiety, particularly when it is over-active. When David Nutt and his colleagues asked people on psilocybin to think of a happy memory, they found that the volunteers could remember happy memories more vividly – almost as if they were reliving them. These test subjects were also much happier after recalling positive memories. Dampening the activity in the connector hubs and getting a boost from recalling happier times could be enough to get people out of the vicious circle of depression. Fortunately, the Medical Research Council has since funded David Nutt to conduct further studies into the use of psilocybin as a treatment for depression.

Post-traumatic stress disorder and depression are two of the biggest mental health issues that we face and current treatments are plainly inadequate. It seems ridiculous that the government’s policies on drugs prevent scientific studies aiming to reap benefits from them. These treatments show good potential and yet little is being done to take advantage of them. This situation is nothing new – after being fêted as a treatment for alcoholism in the 1960s, LSD has largely been ignored ever since it was declared illegal. Even though, according to David Nutt, it is probably as good at treating alcoholism as anything we’ve got now, researchers are limited to reanalysing studies completed 50 years ago.

What treatments are out there, undiscovered because of governments’ heavy-handed approaches towards recreational drugs? I’m just glad that there are researchers like David Nutt who are willing to make the effort, and take the flak, in order to find out.